People are still debating whether Edward Snowden is a traitor deserving a cage next to Chelsea Manning or a hero deserving praise (hint: unless you believe the latter, you're wrong). But one benefit nobody can deny is the overall improvement to computer security his actions have led to. In addition to more people using cryptographic tools, we are also getting a better idea of which tools work and which don't:

The NSA also has “major” problems with Truecrypt, a program for encrypting files on computers. Truecrypt’s developers stopped their work on the program last May, prompting speculation about pressures from government agencies. A protocol called Off-the-Record (OTR) for encrypting instant messaging in an end-to-end encryption process also seems to cause the NSA major problems. Both are programs whose source code can be viewed, modified, shared and used by anyone. Experts agree it is far more difficult for intelligence agencies to manipulate open source software programs than many of the closed systems developed by companies like Apple and Microsoft. Since anyone can view free and open source software, it becomes difficult to insert secret back doors without it being noticed. Transcripts of intercepted chats using OTR encryption handed over to the intelligence agency by a partner in Prism — an NSA program that accesses data from at least nine American internet companies such as Google, Facebook and Apple — show that the NSA’s efforts appear to have been thwarted in these cases: “No decrypt available for this OTR message.” This shows that OTR at least sometimes makes communications impossible to read for the NSA.

Things become “catastrophic” for the NSA at level five – when, for example, a subject uses a combination of Tor, another anonymization service, the instant messaging system CSpace and a system for Internet telephony (voice over IP) called ZRTP. This type of combination results in a “near-total loss/lack of insight to target communications, presence,” the NSA document states.

[…]

Also, the “Z” in ZRTP stands for one of its developers, Phil Zimmermann, the same man who created Pretty Good Privacy, which is still the most common encryption program for emails and documents in use today. PGP is more than 20 years old, but apparently it remains too robust for the NSA spies to crack. “No decrypt available for this PGP encrypted message,” a further document viewed by SPIEGEL states of emails the NSA obtained from Yahoo.

So TrueCrypt, OTR, PGP, and ZRTP are all solid tools to use if you want to make the NSA's job of spying on you more difficult. It's actually fascinating to see that PGP has held up for so long. The fact that TrueCrypt is giving the NSA trouble makes its developers' statement that it is insecure all the more questionable. And people can finally stop claiming that Tor isn't secure because it started off as a government project. But all is not well in the world of security. There are some things the NSA has little trouble bypassing:

One example is virtual private networks (VPN), which are often used by companies and institutions operating from multiple offices and locations. A VPN theoretically creates a secure tunnel between two points on the Internet. All data is channeled through that tunnel, protected by cryptography. When it comes to the level of privacy offered here, virtual is the right word, too. This is because the NSA operates a large-scale VPN exploitation project to crack large numbers of connections, allowing it to intercept the data exchanged inside the VPN — including, for example, the Greek government's use of VPNs. The team responsible for the exploitation of those Greek VPN communications consisted of 12 people, according to an NSA document SPIEGEL has seen.

[…]

Even more vulnerable than VPN systems are the supposedly secure connections ordinary Internet users must rely on all the time for Web applications like financial services, e-commerce or accessing webmail accounts. A lay user can recognize these allegedly secure connections by looking at the address bar in his or her Web browser: With these connections, the first letters of the address there are not just http — for Hypertext Transfer Protocol — but https. The "s" stands for "secure". The problem is that there isn't really anything secure about them.

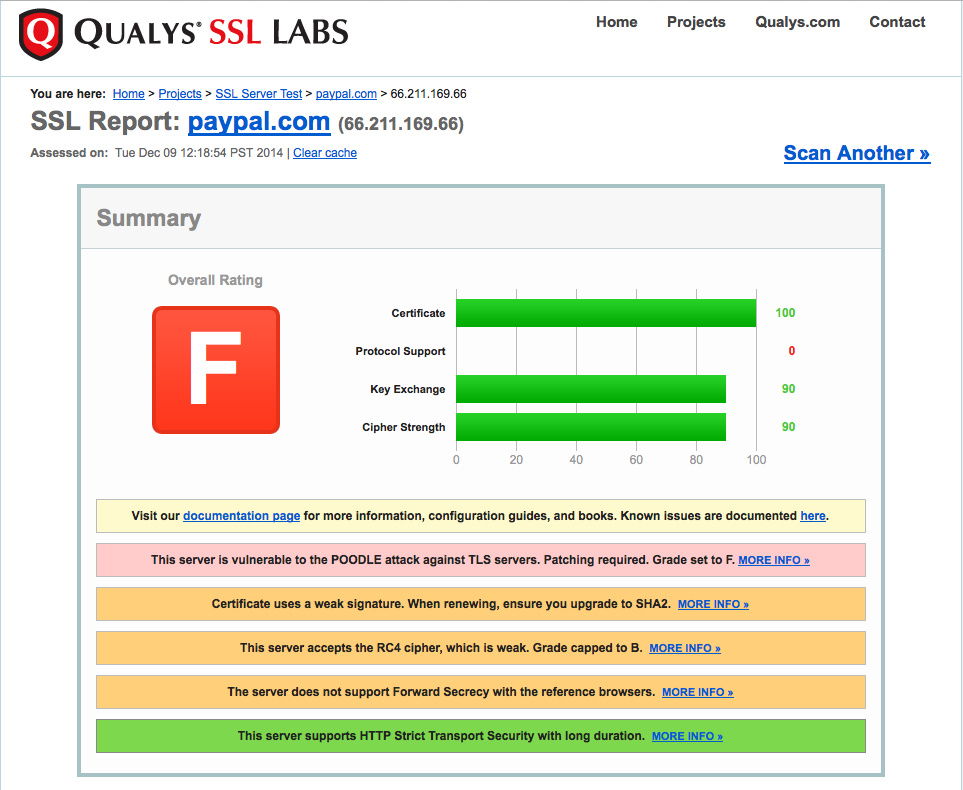

How the NSA is able to bypass VPN and HTTPS is still an open question. My guess is that the NSA's ability to break HTTPS depends on how a given site implements it. Many sites, including ones such as PayPal, fail to implement HTTPS securely. This may be an attempt to maintain backward compatibility with older systems, or it may be incompetence. Either way, it certainly makes the NSA's job easier. VPN security, likewise, may be implementation-dependent. Most VPN software is fairly complex, which makes configuring it securely difficult. As with HTTPS, it's easy to set up a VPN server that isn't secure.

The ultimate result of this information is that the tools we rely on will become more secure as people address the weaknesses being exploited by the NSA. Tools that cannot be improved will be replaced. Regardless of your personal feelings about Edward Snowden's actions, you must admit that they are making the Internet more secure.