GPGTools is a software suite that makes using OpenPGP on macOS easier. I’ve recommended this tool for quite some time to the three people who are interested in encrypting the contents of their e-mail. While the tool was freely available, the development team has been warning users for over a year that the suite would eventually move to a paid model. I completely understand their motivation. A man has to eat after all. However, there are proper ways to change business models and improper ways. The GPGTools team chose the improper way.

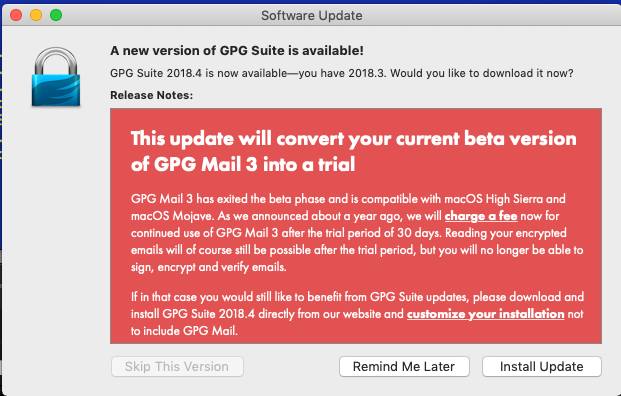

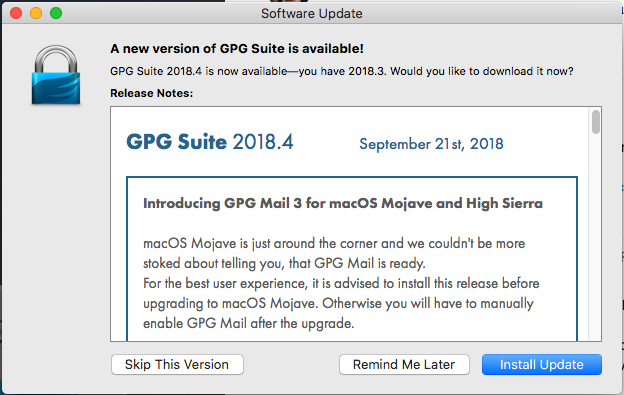

Here is the latest update notification for GPGTools:

It looks innocuous enough, but if you install it, you’ll discover that your Mail.app plugin has become a one-month trial. The initial screen of the update note doesn’t indicate that this update is the one that moves GPGTools from free to paid. You have to scroll down to learn that tidbit of information. Since most users probably don’t scroll through the entire update note, they will likely be rather surprised when their free app starts telling them that they have to pay.

Another issue with GPGTools’s transition is that there is no English version of the terms of distribution. Since GPGTools is based in Germany, this might not seem odd but everything else on the site is translated into English. If you’re going to toss a license agreement at somebody, you should provide it in every language that your application supports.

The final major problem with the transition was that there was originally no information about the license being sold. This has fortunately been fixed, but you can still read about it by digging through the announcement thread on Twitter. When you went to buy a license, the site originally didn’t tell you if the license was per computer, per user, or something else. Now the site states that the purchase covers one person and activation on up to three computers (a limit that I find more restrictive than I prefer).

I’m not one to criticize somebody when they make an effort to profit from their endeavors but GPGTools’s transition from a free suite to a paid suite should be a valuable lesson on how not to perform such a transition.

If you’re ever in a situation where you want to begin charging users for something that you have been providing for free, here are a few rules.

First, don’t foist the change on users out of the blue. Announce your intentions early. Moreover, give your users a firm date as soon as possible. GPGTools’s development team kept saying that the change would come eventually but never provided a hard date.

Second, if you’re going to change the business model through an update, make sure that the update informs users in a very obvious manner. That information should be the first thing in the update note. It wouldn’t hurt to put that part of the note in big bold letters so it jumps out at the user. An even better solution would be to release one more free version that tells the user that the next version will be the one that transitions to a paid model. Then, when that next update is released, have the app clearly tell the user that installing it will move the software over to the paid model.

Third, make sure you tell the user what they’re purchasing. The link to buy the software should inform the user if the license is per user, per computer, a monthly subscription, or something else.

Fourth, make any license agreements available in every language that the software supports. If the application is translated into English, then the user should expect an English version of any license agreements to be available.

If anybody is wondering if I’m going to buy a license for GPGTools, the answer is maybe. I haven’t been enamored with the GPGTools development team. Its biggest problem has been a lack of timeliness. Mail.app doesn’t officially support plugins, so the GPGTools plugin requires a fair bit of hackery and often breaks between major macOS releases. GPGTools has often been months behind major macOS releases, which means that there have often been months where the tool simply doesn’t work if you’re running the current version of macOS. I’m willing to overlook such an issue for a free tool (you get what you pay for) but not for a paid tool. So the GPGTools development team will have to demonstrate an ability to have working versions of its software available when new versions of macOS are released before I’ll purchase a license. I also find the three-computer limit too restrictive. I’d rather see it bumped up to at least five computers or, better yet, unlimited computers (merely make it a per-user license agreement).

If the GPGTools development team does resolve these issues, I’ll likely buy a license. It’s only $23.90, which is reasonable (that price is for the current major version; it is implied that a new license will be required for the next major release). And while I don’t use encrypted e-mail very often (not for lack of want but for lack of people who also use it), I do like to throw money at teams that make quality products, and GPGTools, minus the issues noted in the previous paragraph, has been a quality product.