Today’s the 30th anniversary of Pac-Man. To celebrate, Google has put up a new banner, except it’s not just a banner: it’s a fully playable Pac-Man game written in JavaScript! Head over to Google, click the Insert Coin button, and play a round while you’re pretending to be searching for something work related.

Tag: Geek Stuff

3D Printers

There are several technologies I absolutely adore and 3D printers are one of them. 3D printers are devices that make objects from a design file, using a series of printer heads to slowly piece the object together from raw material. For instance, you could make a Glock pistol frame from strands of plastic: the printer melts the plastic and ejects it from the printer heads in the desired form. I didn’t realize how far this technology had come along until I found out they made Robert Downey Jr.’s Iron Man armor for Iron Man 2 using such a device.
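To make the "piece the object together" idea a little more concrete, here is a minimal sketch. It assumes a printer that builds a part as a stack of thin horizontal layers (which is how the plastic-extruding machines work) and slices a simple sphere into those layers. `slice_sphere` and its parameters are hypothetical names for illustration, not part of any real slicing software.

```python
import math


def slice_sphere(radius_mm: float, layer_height_mm: float) -> list[tuple[float, float]]:
    """Return (z, circle_radius) for each layer of a sphere resting on the bed.

    The sphere's centre sits at z = radius_mm. Each layer is sampled at its
    mid-height; the printer would trace a circle of that radius, then move
    up one layer and repeat.
    """
    layers = []
    n_layers = math.ceil(2 * radius_mm / layer_height_mm)
    for i in range(n_layers):
        z = (i + 0.5) * layer_height_mm  # mid-height of this layer
        offset = z - radius_mm           # distance from the sphere's centre
        if abs(offset) >= radius_mm:
            continue                     # layer lies outside the sphere
        layers.append((z, math.sqrt(radius_mm ** 2 - offset ** 2)))
    return layers


layers = slice_sphere(10.0, 0.5)
print(len(layers))  # 40
```

Thinner layers mean more passes and a smoother surface, which is part of why printing is slow.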

The suit parts were constructed using 3D printers, which made fabrication as simple as designing them on a computer. Likewise, they were made to fit Downey’s body, making them more comfortable to wear on set.

I got excited about this technology when it showed up in Daemon and Freedom(TM), where agents of the Daemon used it to construct pretty much everything they needed. Of course, the 3D printers used by Daemon agents could use metal dust to construct objects that plastic just wouldn’t work for (such as machine parts for their automated vehicles). Having such devices in your household would be a huge boon. Just imagine being able to construct a 1911 frame out of metal dust (oh, that would make the anti-gunners shit themselves endlessly). Or maybe construct replacement parts for your vehicle. So long as you have raw materials around, you could conceivably create anything you want or need.

Currently this technology is pretty pricey, although there is a project called MakerBot which is an open specification for creating such machines. MakerBots can currently make almost anything that fits within 4″x4″x6″. Obviously that’s not very practical yet, but it will most certainly become more advanced and cheaper as time goes on; that’s the benefit of technology.
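A quick way to see what the 4″x4″x6″ limit means in practice is to check whether a part's bounding box fits the build volume when the part may be turned in 90-degree steps. This is a minimal sketch; `fits_build_volume` is a hypothetical helper, and because it only looks at bounding boxes it is conservative for parts that could squeeze in diagonally.

```python
from itertools import permutations

# Build envelope quoted for the MakerBot, in inches (width, depth, height).
BUILD_VOLUME_IN = (4.0, 4.0, 6.0)


def fits_build_volume(part_in, volume_in=BUILD_VOLUME_IN):
    """Return True if the part's bounding box fits in some 90-degree orientation."""
    return any(
        all(p <= v for p, v in zip(orientation, volume_in))
        for orientation in permutations(part_in)
    )


print(fits_build_volume((5.0, 3.0, 3.0)))  # True: stand it on end so 5 in is the height
print(fits_build_volume((5.0, 5.0, 1.0)))  # False: two sides exceed 4 in
```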

Frankly, this technology is practically limitless in its potential. I’m glad to see it’s advancing pretty fast.

The Kindle Isn’t a Textbook Replacement

A few colleges were doing trial runs of the Kindle DX as a replacement for textbooks. Well, Princeton’s review wasn’t so hot (in fact it was downright damning), and now Darden is backing Princeton up:

“You must be highly engaged in the classroom every day,” says Koenig, and the Kindle is “not flexible enough. … It could be clunky. You can’t move between pages, documents, charts and graphs simply or easily enough compared to the paper alternatives.”

Yeah, I can also confirm this. The Kindle is great for reading novels and other books you go through serially, but it’s not so hot for books where you jump around a lot. The interface and page turning are too slow for that. I think e-ink displays will need another couple of years of advancement before e-readers are viable textbook replacements.

Like most technology in its infancy e-ink displays will take some time to become viable general purpose tools.

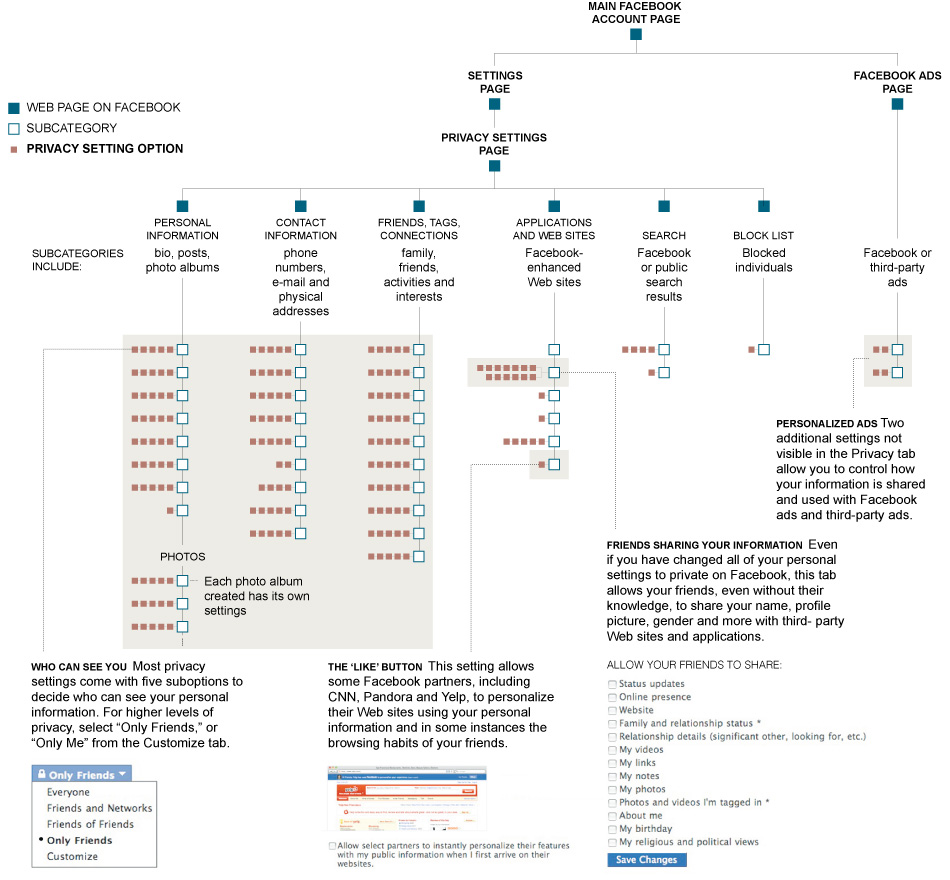

Facebook’s Privacy

There has been a lot of hoopla over Facebook’s privacy practices. Well, these two pictures illustrate how simple Facebook’s privacy really is:

See it’s very simple!

Here I Thought They Were Already Doing This

This shows how paranoid I’ve become. MSNBC has a story about Amazon taking the notes and highlights uploaded from the Kindle and aggregating the data in such a way that other people can view it.

Since the Kindle is able to sync things like notes and highlights, I already knew they were being uploaded to Amazon’s servers. Likewise, since the file storing said notes and highlights is a plain text file, I assumed it wasn’t being encrypted. Finally, I assumed the data was being sifted through and aggregated at some point. In other words, I’m paranoid and trust nobody.

Well apparently Amazon wasn’t really doing anything with the data but will be soon. They’re trying to turn the Kindle into a social network reading device (yeah I just made that up and it’s officially my buzzword, wait this is under Creative Commons… crap). What Amazon is planning on doing is making popular highlights and such available for Kindle books.
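Amazon hasn't said how it will pick popular highlights, so the following is only a guess at the general idea: count how many distinct readers highlighted the same passage and surface the most common ones. The record layout `(reader_id, book, start_loc, end_loc)`, the sample data, and `popular_highlights` are all hypothetical. Matching on exact locations is a simplification; a real system would have to merge overlapping ranges.

```python
from collections import Counter


def popular_highlights(highlights, min_readers=2, top_n=3):
    """Rank passages by how many distinct readers highlighted them.

    highlights: iterable of (reader_id, book, start_loc, end_loc) tuples.
    """
    seen = set()
    counts = Counter()
    for reader, book, start, end in highlights:
        passage = (book, start, end)
        if (reader, passage) in seen:
            continue  # count each reader only once per passage
        seen.add((reader, passage))
        counts[passage] += 1
    return [(p, n) for p, n in counts.most_common(top_n) if n >= min_readers]


# Hypothetical sample data.
sample = [
    ("alice", "Daemon", 1200, 1210),
    ("bob", "Daemon", 1200, 1210),
    ("carol", "Daemon", 1200, 1210),
    ("alice", "Daemon", 1200, 1210),  # duplicate from the same reader
    ("bob", "Freedom", 50, 60),
]
print(popular_highlights(sample))  # [(('Daemon', 1200, 1210), 3)]
```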

If you don’t like this feature, the only way I know of to disable it is to never turn on the wireless card or perform a sync operation. Either way, you should know about this feature before they implement it, and I’m sure Amazon will do everything in their power to not alert anybody to it.

Upcoming Kindle Firmware

A while back I mentioned that Amazon is planning to roll out new firmware for the Kindle. Well, Ars Technica has a good review of the firmware (the lucky dogs got it early).

I have to say overall I’m excited; the categories feature alone is enough to excite me. But it appears Amazon also implemented a decent password system. If you don’t enter your password properly, it pops up a message saying that if you forgot your password you should call Amazon, and it gives you a number. This leads me to wonder if the password has to be reset remotely. If so, it would be a boon when the device gets stolen, because Amazon could simply refuse to reset a Kindle that has been reported stolen, making it a paperweight. On the other hand, they would need a mechanism in place to reset the password on Kindles not within range of a cellular data network.

I’m far less excited about the social networking features. Needless to say it uses a URL shortening service (which I talked about today) to post passages from your books on Facebook and Twitter. On the other hand Amazon controls the service so you can be fairly sure (although not completely) the links you see from them are legitimate.
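If you want to act on that "fairly sure" before clicking, the check boils down to confirming that a short link's host is one the vendor actually runs. This is a minimal sketch; `TRUSTED_SHORTENER_HOSTS` and its placeholder domain are hypothetical stand-ins, not Amazon's real shortener. Note that it only tells you who issued the short link, not whether the page it redirects to is safe.

```python
from urllib.parse import urlsplit

# Hypothetical allow list: fill in the shortener host(s) the vendor actually operates.
TRUSTED_SHORTENER_HOSTS = {"short.example.com"}


def is_trusted_short_link(url: str) -> bool:
    """True only if the link is http(s) and its host exactly matches the allow list.

    urlsplit().hostname drops any "user@" prefix and port and lowercases the
    host, so tricks like "https://short.example.com@evil.example/" are rejected.
    """
    parts = urlsplit(url)
    if parts.scheme not in ("http", "https"):
        return False
    return parts.hostname in TRUSTED_SHORTENER_HOSTS


for link in ("https://short.example.com/abc123",
             "https://short.example.com.evil.example/abc123",
             "https://short.example.com@evil.example/abc123"):
    print(link, is_trusted_short_link(link))
```

Exact host matching (rather than "ends with") is what stops look-alike domains such as the second example.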

The other feature I’m looking forward to, which wasn’t covered much, is the ability to zoom and pan PDF files. PDF files don’t scale well on the Kindle’s small screen and are only legible if you put the device in landscape mode. Being able to zoom in and pan will allow you to read PDFs in portrait mode on the regular small Kindle.
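Some rough arithmetic shows why PDFs suffer on the small Kindle and what zoom-and-pan buys you. This sketch assumes a 6-inch panel of 600x800 pixels at about 167 ppi and a PDF laid out on US Letter paper (8.5 x 11 inches); the function names are hypothetical, and Amazon's actual implementation is unknown.

```python
# Assumed figures: a 6-inch Kindle panel of 600x800 pixels at about 167 ppi,
# and a PDF laid out on US Letter paper (8.5 x 11 inches).
SCREEN_PX = (600, 800)  # portrait width, height
SCREEN_PPI = 167
PAGE_IN = (8.5, 11.0)


def screen_inches(screen_px, ppi=SCREEN_PPI):
    """Physical size of the screen in inches."""
    return screen_px[0] / ppi, screen_px[1] / ppi


def fit_page_scale(screen_px, page_in=PAGE_IN):
    """Fraction of real size when the whole page is squeezed onto the screen."""
    sw, sh = screen_inches(screen_px)
    return min(sw / page_in[0], sh / page_in[1])


def fit_width_scale(screen_px, page_in=PAGE_IN):
    """Fraction of real size when the page width fills the screen width."""
    sw, _ = screen_inches(screen_px)
    return sw / page_in[0]


def viewport(zoom, pan_x, pan_y, screen_px=SCREEN_PX, page_in=PAGE_IN):
    """Page rectangle (x, y, w, h) in inches visible at `zoom` times real size.

    Panning offsets are in inches and are clamped so the view never
    slides off the edge of the page.
    """
    sw, sh = screen_inches(screen_px)
    w = min(sw / zoom, page_in[0])
    h = min(sh / zoom, page_in[1])
    x = min(max(pan_x, 0.0), page_in[0] - w)
    y = min(max(pan_y, 0.0), page_in[1] - h)
    return x, y, w, h


portrait = fit_page_scale(SCREEN_PX)
landscape = fit_width_scale((SCREEN_PX[1], SCREEN_PX[0]))
print(round(portrait, 2), round(landscape, 2))  # 0.42 0.56
```

Under these assumptions, 10-point text shrinks to roughly 4.2 points in portrait but 5.6 points in landscape, which matches the experience of landscape being the only legible option. At `zoom=1.0` the portrait screen becomes a window of about 3.6 x 4.8 inches that you pan around a full-size page.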

Now Amazon just needs to hurry up and release the Kindle Development Kit so I can start writing applications for the bloody device (yes, I have ideas for applications for my Kindle).

Ubuntu 10.04

So the new version of Ubuntu was released a short while ago. It’s now at version 10.04, also called ludicrous lackey or something like that. Anyway, I’m already fighting with it inside the confines of a virtual machine. Here’s a very quick bullet-point list of what I noticed:

- The new theme is ugly as sin

- Now that the window control buttons have been moved to the left side (think Mac OS) the left side of the window feels cluttered (window controls plus the menu bar on the same side)

- Installing Eclipse from Ubuntu’s software repositories still leaves several update URLs out of Eclipse; that problem hasn’t been fixed

More to come as I experience it.

Hollywood Computers

We’ve all seen movies where the star creates a computer virus by aligning three-dimensional cubes on a ten-monitor display in order to build a super virus that destroys the bad guys’ computers. Hollywood believes computers are magic, and I found a good list of Hollywood’s favorite computer sorcery. My pet peeve is on there:

3. You can zoom and enhance any footage

This has long been the staple of the lazy writer (particularly those working for CSI): a security camera or photo is put on a screen, someone asks for zone G4 to be zoomed and enhanced, then as if by magic stunning detail appears from nowhere and the criminal is identified.

For this system to work it either requires every camera and CCTV system to use Gigapixel resolutions, or such incredible computing technology that Hollywood could throw away all of its expensive HD cameras and shoot everything using £50 camcorders.

As we all know, all zooming into a poor-quality image would do is give a muddled blurry mess on the screen. This technique was recently brilliantly parodied in Red Dwarf.
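The reason "enhance" can't work is easy to demonstrate: enlarging an image only recombines pixel values that were already captured. This minimal sketch uses nearest-neighbour scaling on a tiny hypothetical 2x2 "camera" crop; `upscale_nearest` is an illustrative name. Smoother methods such as bilinear interpolation blend neighbouring pixels instead of copying them, but their output is still built only from the original values.

```python
def upscale_nearest(image: list[list[int]], factor: int) -> list[list[int]]:
    """Enlarge a grid of pixel values by repeating each pixel factor x factor times."""
    return [
        [row[x // factor] for x in range(len(row) * factor)]
        for row in image
        for _ in range(factor)  # repeat each enlarged row `factor` times
    ]


tiny = [[10, 200],
        [90, 30]]  # hypothetical 2x2 crop from a security camera
big = upscale_nearest(tiny, 4)

print(len(big), len(big[0]))                    # 8 8
print(sorted({p for row in big for p in row}))  # [10, 30, 90, 200]
```

The image is now 64 pixels instead of 4, yet it still holds exactly four distinct values; no license plate appears.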

Next Version of WebOS Coming Soon

Well, according to an e-mail I received today, a new version of WebOS is probably on the horizon:

The next version of webOS is coming soon.

You will receive an email alert in early May announcing the availability of an SDK release candidate. Please be prepared to begin testing your apps right away.

Because the scope of the changes in this update is limited, we won’t be going through a full SDK beta cycle:

You will have approximately one week to report show-stopper bugs in webOS before it is released to carriers.

Once the build has been released to carriers, you will have another 2-3 weeks to address app-level bugs before the update lands on consumer devices.

It is especially important to test PDK apps against this release candidate. Developers of non-PDK apps should also test their apps to catch any unanticipated issues.

I wonder if it will have HP’s branding on it by then.

Intel’s Core i5 and i7 Processors

OK everybody, it’s super geek time here on A Geek With Guns. If your doctor has warned you to avoid discussion of computer hardware, this post should be ignored. Otherwise, proceed with caution.

Intel recently released new processors dubbed the i5 and i7 series. One of the new features of these processors is a mandatory integrated graphics core. Needless to say, integrated graphics are hated by anybody who does graphics-heavy work, so some whining has cropped up over Intel’s decision. The processors do support switchable graphics, meaning you can seamlessly switch between the integrated graphics core and another graphics processing unit in the system, so really it’s a non-issue. Still, people are curious why Intel made integrated graphics mandatory instead of optional. When you look into it, having an on-board graphics core makes a lot of sense.

Graphics processing units perform better at certain tasks than standard processing units. It used to be that GPUs were only used for 3D games, and hence only gamers really cared which one they had in their system. But GPUs are useful for a great deal of other things, such as video encoding and decoding.
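What makes a task "GPU-shaped" is that it breaks into a huge number of small, independent operations. This minimal sketch uses a hypothetical per-pixel brightness adjustment: each output pixel depends only on its own input pixel, so the work can be spread across any number of workers in any order. Python threads won't actually make this faster (the interpreter lock serializes them); the point is the structure of the work, which a GPU exploits by running thousands of such operations at once.

```python
from concurrent.futures import ThreadPoolExecutor


def brighten(pixel: int, amount: int = 40) -> int:
    """Brighten one 8-bit pixel, clamping at white (255)."""
    return min(pixel + amount, 255)


def brighten_frame(pixels: list[int], workers: int = 4) -> list[int]:
    """Apply `brighten` to every pixel using a pool of workers.

    Because no pixel depends on any other, the calls can run in any order;
    pool.map still returns the results in input order.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(brighten, pixels))


frame = [0, 100, 230, 255]
print(brighten_frame(frame))  # [40, 140, 255, 255]
```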

When writing applications generally a programmer writes in a programming language and uses a mechanism (compiler, interpreter, virtual machine, etc.) to convert said language into something the computer actually understands. In the case of compilers a programming language is converted into machine language (simplified explanation). Different CPUs have different instructions available to them and oftentimes developers are forced to compile their applications to the lowest common denominator (an instruction set available on as many CPUs as possible). This means their applications aren’t taking advantage of the best hardware available when it is available.
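One common way around the lowest-common-denominator problem (a related technique, not something the post describes) is runtime dispatch: ship both a baseline code path and a faster one, then pick at startup based on what the CPU reports. This sketch reads the `flags` line from Linux `/proc/cpuinfo`-style text; the sample text is made up, and `sum_vectorized` is a hypothetical stand-in for a native routine that would actually use newer instructions such as AVX.

```python
SAMPLE_CPUINFO = """\
processor\t: 0
model name\t: Example CPU
flags\t\t: fpu sse sse2 sse4_1 sse4_2 avx
"""


def cpu_flags(cpuinfo_text: str) -> set[str]:
    """Collect the feature flags from /proc/cpuinfo-style text (first CPU only)."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            return set(value.split())
    return set()


def sum_baseline(values):
    """Lowest-common-denominator path: works on every CPU."""
    total = 0
    for v in values:
        total += v
    return total


def sum_vectorized(values):
    """Hypothetical stand-in for a path that would use AVX in native code."""
    return sum(values)


def choose_sum(flags: set[str]):
    """Pick the fast path only when the CPU advertises the feature it needs."""
    return sum_vectorized if "avx" in flags else sum_baseline


summer = choose_sum(cpu_flags(SAMPLE_CPUINFO))
print(summer.__name__, summer([1, 2, 3]))  # sum_vectorized 6
```

On a real Linux machine you would pass in `open("/proc/cpuinfo").read()` instead of the sample text. Both paths must return identical results; only the speed should differ.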

Having an integrated GPU ensures instructions specific to GPUs will always be there, so programmers can write their applications with this in mind. Granted, right now there is no guarantee, with all the older processors out there lacking integrated graphics, but in time old systems will become the minority and it will be easier to drop support for them.

Intel didn’t include the integrated GPU for monopolistic reasons. If that were the reason I believe they would have made it more difficult to switch to an external graphics card. Intel wanted to ensure the hardware was available on as many systems as possible for doing work made faster by a GPU.